Panoramic vision system

[Bispectral fusion wide-angle vision system]

Function description

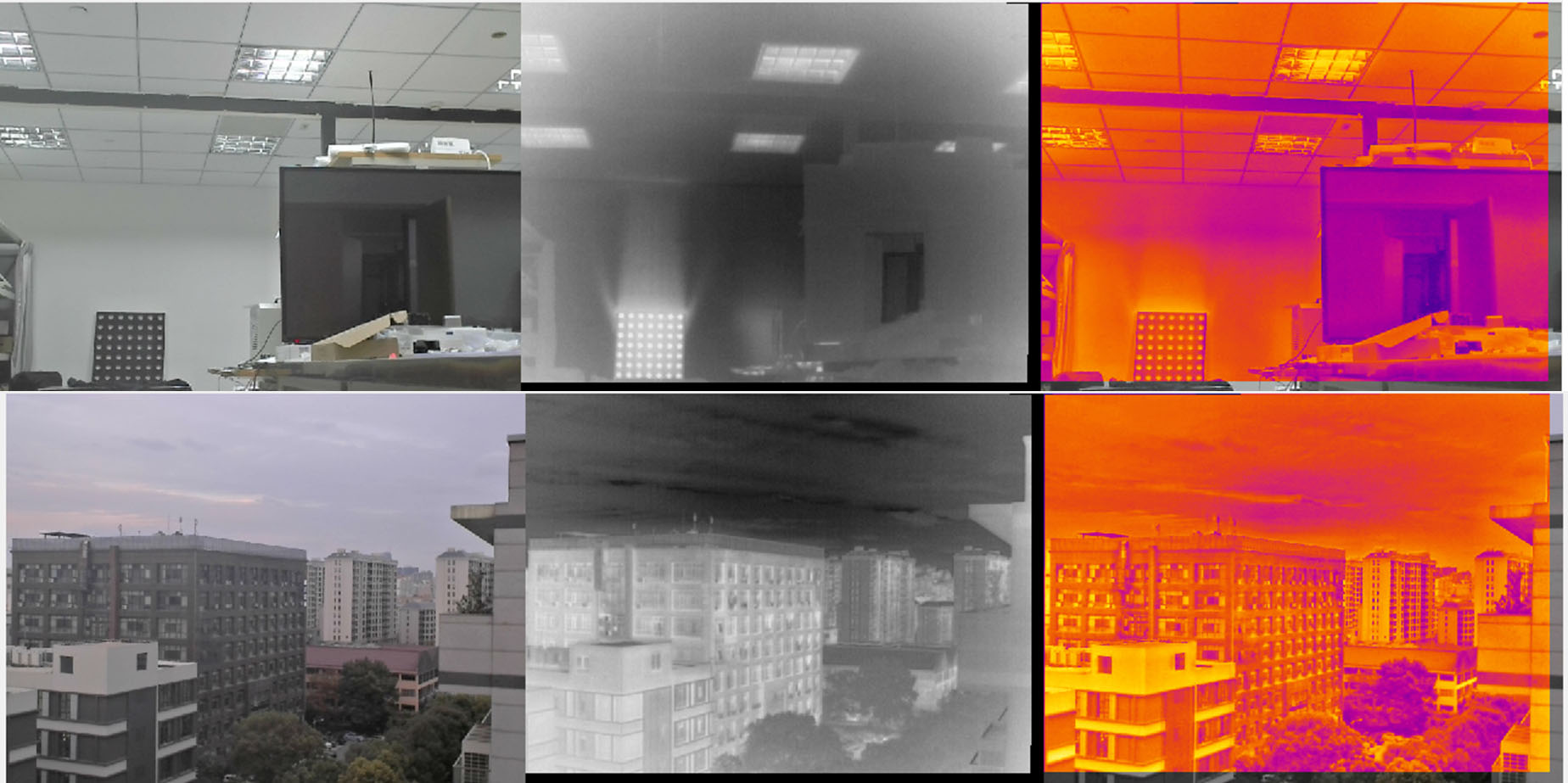

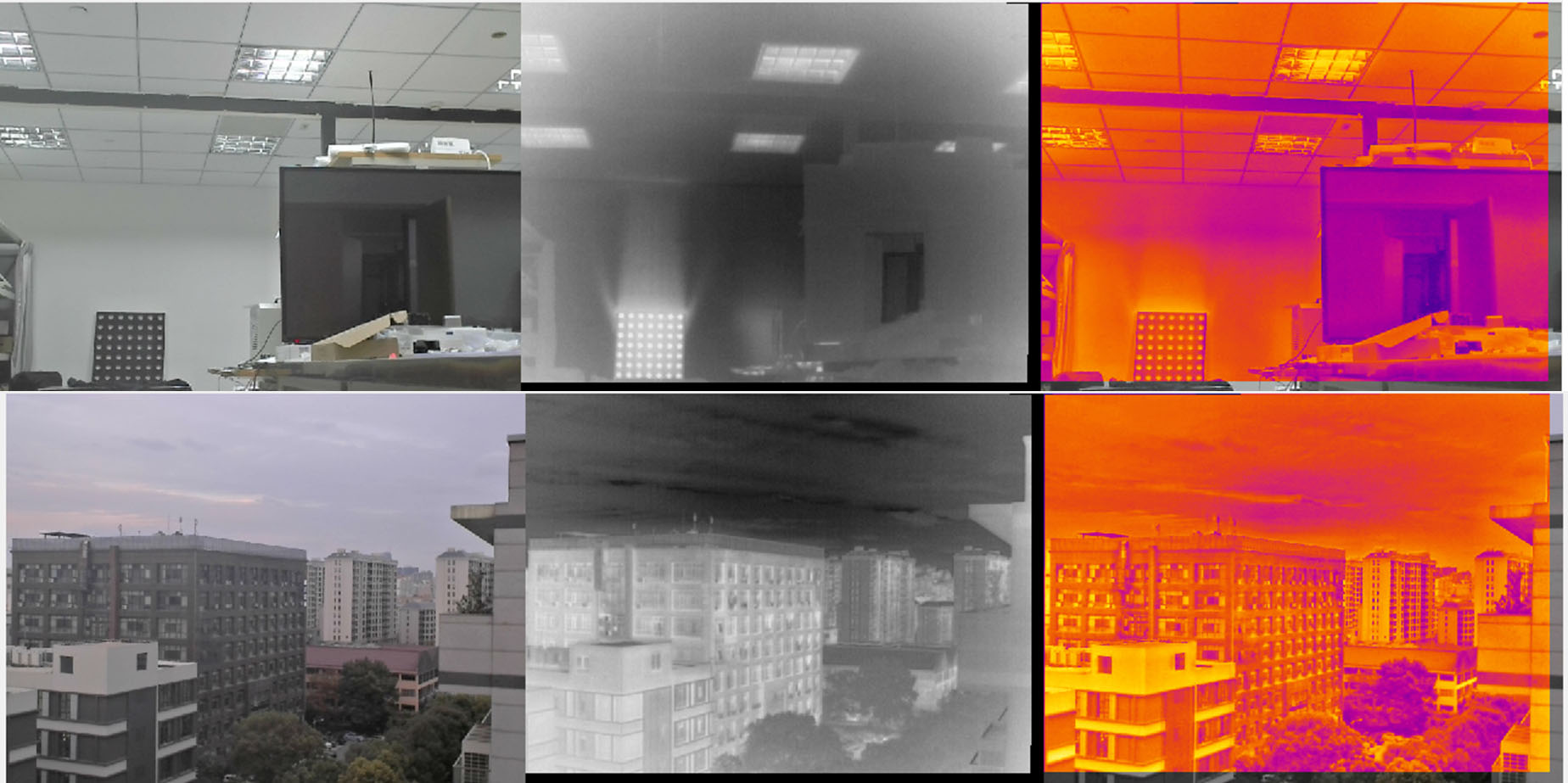

The system performs deep pixel-level fusion of thermal infrared and low-light dual-spectral images and uses real-time, seamless staring image stitching to meet the needs of multi-spectral, wide-angle, long-distance observation. Combined with edge-computing intelligent hardware, it provides target detection, classification and tracking, greatly improving dynamic situational awareness compared with previous systems. It is widely used in assisted driving, reconnaissance and surveillance, security and other fields.

Characteristic parameter

The horizontal field of view of the dual-optical fusion wide-angle vision system is 120°. The patented coaxial dual-spectral pixel-level fusion technology achieves deep panoramic fusion with high real-time performance and single-pixel registration accuracy, and the recognition distance for a typical human body exceeds 100 m. The system performs well at night and in rain and fog.
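The brochure does not describe its fusion algorithm. As a rough illustration of what "pixel-level fusion" means once two images are registered to single-pixel accuracy, the sketch below blends each thermal pixel with the corresponding low-light pixel using a fixed weight. The function name `fuse_pixels` and the weight `w_ir` are hypothetical, and a simple weighted average is a stand-in for whatever patented method the product actually uses.

```python
def fuse_pixels(ir, lowlight, w_ir=0.5):
    """Blend two co-registered grayscale images (0..255) pixel by pixel.

    Hypothetical sketch: assumes both images are already registered to
    single-pixel accuracy, so pixel (r, c) in one matches (r, c) in the other.
    """
    if len(ir) != len(lowlight) or any(len(a) != len(b) for a, b in zip(ir, lowlight)):
        raise ValueError("images must have identical dimensions")
    if not 0.0 <= w_ir <= 1.0:
        raise ValueError("w_ir must be in [0, 1]")
    fused = []
    for row_ir, row_ll in zip(ir, lowlight):
        # Weighted average per pixel: w_ir for thermal, (1 - w_ir) for low-light.
        fused.append([round(w_ir * p_ir + (1.0 - w_ir) * p_ll)
                      for p_ir, p_ll in zip(row_ir, row_ll)])
    return fused

ir = [[200, 40], [30, 220]]   # toy thermal image
ll = [[100, 120], [90, 60]]   # toy low-light image
print(fuse_pixels(ir, ll, w_ir=0.5))  # [[150, 80], [60, 140]]
```

Practical fusion schemes usually vary the weight per pixel (for example by local contrast), but the fixed weight keeps the registration requirement easy to see.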

[Active and passive integrated wide-angle vision system]

Function description

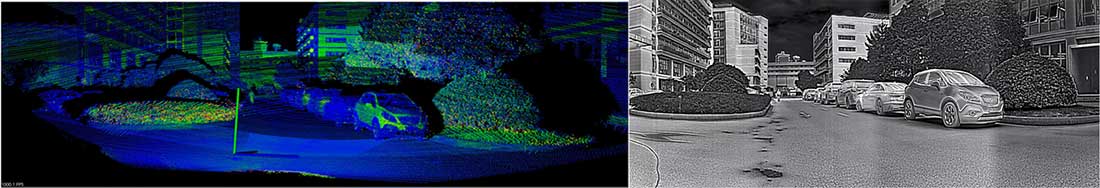

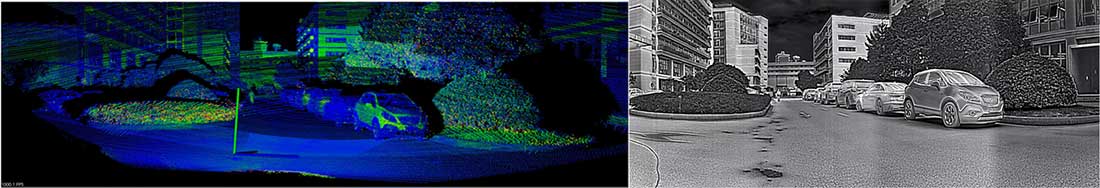

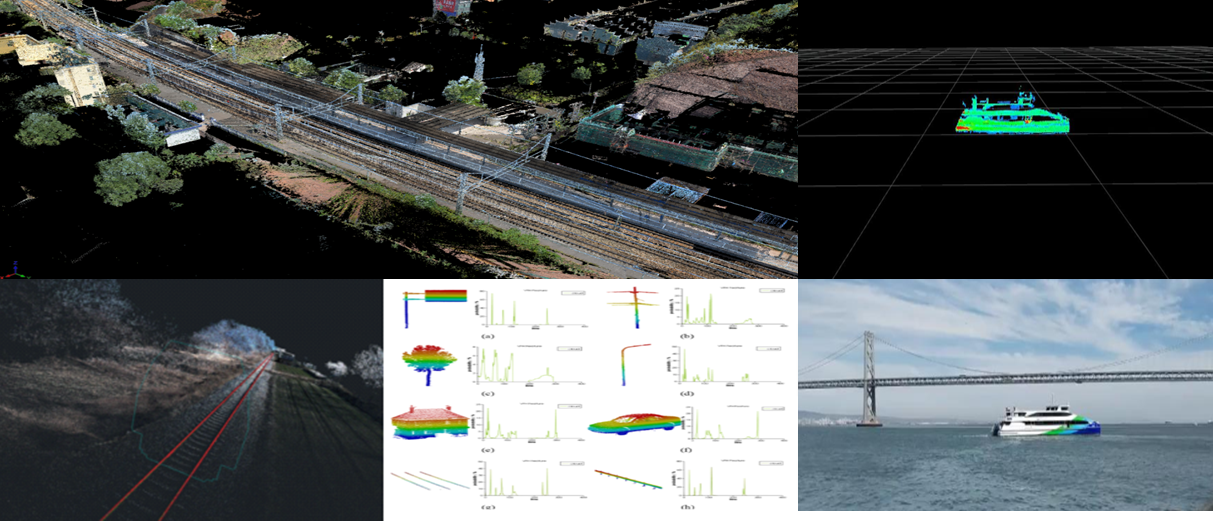

By fusing thermal infrared, low-light and lidar data, the system synchronously obtains composite information: the temperature field, the visible-light texture and the laser 3D point cloud. It combines multi-spectral fusion, active-passive sensing and high-real-time staring panoramic mosaicking, providing richer spatial perception than before. It has great potential for comprehensive perception of complex scenes, such as intelligent ship navigation and ship-shore collaborative perception.

Characteristic parameter

The horizontal field of view of the active-passive fusion wide-angle vision system is 120°. Its wide-angle infrared/low-light dual-light camera provides panoramic deep-fusion imaging, and its long-range, high-resolution special lidar has a maximum detection distance of 500 m.
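Attaching image information (temperature, texture) to lidar points generally requires projecting each 3D point into the camera image. The sketch below shows the standard pinhole projection under assumed calibration values; `R`, `t`, `fx`, `fy`, `cx`, `cy` and the 1280 × 960 image size are hypothetical, not parameters of this product.

```python
def lidar_to_camera(point, R, t):
    """Apply the extrinsic transform p_cam = R * p_lidar + t (assumed calibration)."""
    return tuple(sum(R[i][j] * point[j] for j in range(3)) + t[i] for i in range(3))

def project_point(p_cam, fx, fy, cx, cy, width, height):
    """Pinhole projection of a camera-frame point; returns (u, v) or None."""
    x, y, z = p_cam
    if z <= 0:
        return None  # point is behind the camera
    u = fx * x / z + cx
    v = fy * y / z + cy
    if 0 <= u < width and 0 <= v < height:
        return (u, v)
    return None  # point projects outside the image

R = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]  # identity: frames assumed already aligned
t = [0.0, 0.0, 0.0]                     # zero lever arm (assumed)
p = lidar_to_camera((1.0, 0.5, 10.0), R, t)
print(project_point(p, 1000.0, 1000.0, 640.0, 480.0, 1280, 960))  # (740.0, 530.0)
```

Once a point has a pixel coordinate, the thermal and low-light values at that pixel can be attached to it, which is one common way to build the kind of composite temperature, texture and 3D data described above.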

[360° gaze dual-spectral fusion panoramic system]

Function description

Three infrared/low-light dual-spectrum pixel-level fusion wide-angle vision systems are stitched together to form a 360° panoramic system. It provides comprehensive dynamic situational awareness of the surrounding perimeter and can be applied to circumferential imaging on special equipment and to panoramic security perception of important sites. It can also be used in shipborne panoramic intelligent lookout systems, as it adapts to the heave and sway of a moving platform.

Characteristic parameter

360° staring full-field imaging with deep panoramic fusion, high real-time performance and single-pixel registration accuracy; the recognition distance for a typical human body exceeds 150 m. The system performs well at night and in rain and fog.
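With three 120° units covering 360°, a target bearing must be routed to the unit that sees it. The sketch below assumes the three sectors tile the circle without overlap, with unit k covering [k·120°, (k+1)·120°) and its optical axis at the sector centre; the function `locate_azimuth` and this layout are hypothetical, since the brochure does not state the mounting geometry or overlap.

```python
def locate_azimuth(azimuth_deg, n_units=3):
    """Map a global azimuth to (unit index, offset from that unit's optical axis).

    Assumes n_units identical cameras whose sectors tile 360° with no overlap.
    A negative offset means the target lies toward lower azimuth than the axis.
    """
    sector = 360.0 / n_units                   # 120° per unit for three units
    az = azimuth_deg % 360.0                   # normalise to [0, 360)
    unit = min(int(az // sector), n_units - 1)  # guard against float edge at 360
    local = az - (unit + 0.5) * sector
    return unit, local

for az in (10, 130, 359, -30):
    print(az, locate_azimuth(az))
# 10 (0, -50.0)
# 130 (1, -50.0)
# 359 (2, 59.0)
# -30 (2, 30.0)
```

A real stitched system would normally give adjacent units some overlap for blending, in which case a bearing near a seam could be served by either unit.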

Multi-view, multi-spectrum new-system fusion sensing radar system

[Onboard detailed survey radar system]

Function description

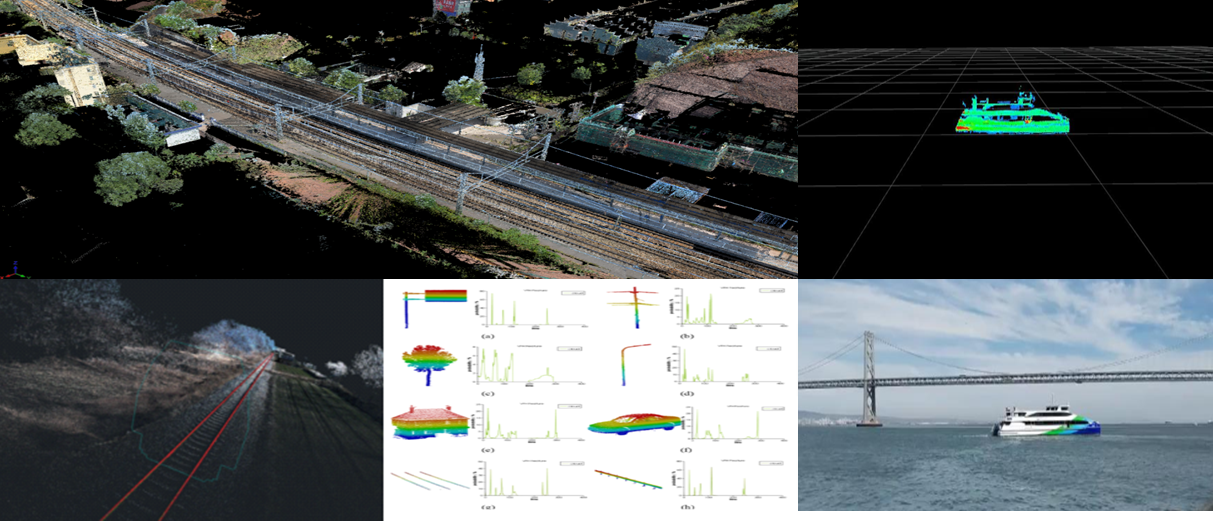

The shipborne detailed survey radar system consists of a lidar, a dual-spectral fusion wide-angle camera and a high-precision electro-optical stabilized gimbal. It is the first such system in China applied to tugboats for intelligent perception of spatial information and fine identification of key parts of ships. By fusing the active lidar 3D point cloud with the passive dual-spectral image, it comprehensively perceives the temperature field, visible-light texture and three-dimensional morphology of the measured target. It can be used in a variety of scenarios, such as security of critical sites, autonomous navigation of mobile platforms such as ships and vehicles, vehicle-road and ship-shore coordination, and threat target acquisition and tracking.

Characteristic parameter

The system offers wide-angle scene perception, rich information acquisition, longer range and a higher recognition rate. It combines a 120° wide-angle infrared/low-light dual-light camera with panoramic deep-fusion imaging, a long-range, high-resolution special lidar with a detection distance of 500 m, and a lightweight, high-precision electro-optical stabilized gimbal that counters platform motion (ship roll ±15°, pitch ±12°) while maintaining 0.02° pointing accuracy.
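To put the 0.02° pointing accuracy in perspective, an angular error θ at range R displaces the line of sight sideways by R·tan θ. The sketch below evaluates this at the quoted 500 m lidar range; it is simple geometry and ignores range error and gimbal dynamics.

```python
import math

def lateral_error(range_m, angle_error_deg):
    """Cross-range offset of the line of sight caused by an angular pointing error."""
    return range_m * math.tan(math.radians(angle_error_deg))

# 0.02° pointing accuracy evaluated at the 500 m detection range quoted above
print(f"{lateral_error(500, 0.02):.3f} m")  # 0.175 m
```

So at 500 m the stabilized line of sight stays within roughly 17 to 18 cm of the target point, even while the ship rolls and pitches within the stated limits.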

[Multi-view superresolution imaging and stereo vision systems]

Function description

Using a multi-view hardware configuration together with multi-view super-resolution imaging and multi-view stereo vision algorithms from computational optics, the system improves the imaging performance of a multi-spectral optical system through software. It therefore combines infrared/low-light dual-light fusion with higher resolution and better smoke penetration, and obtains three-dimensional morphology information of the target. It is applicable to security of major key sites, assisted-driving systems for rail-transit traffic safety, roadside sensors in vehicle-road cooperation, and similar applications.

Characteristic parameter

The multi-view super-resolution imaging and stereo vision systems offer richer information fusion (heat, visible texture, spectrum and three-dimensional information) and sharper imaging, and overcome day/night and weather limitations. The dual-spectrum binocular stereo vision system is available with a 40° × 30° or a 20° × 15° field of view, with corresponding personnel identification distances of 150 m and 300 m. Stereo ranging accuracy reaches ±3% at 50 m, and short-range imaging accuracy at 10 m reaches the millimetre level.
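The reason stereo ranging accuracy is quoted at a specific distance is that, for a rectified stereo pair, depth is Z = f·B/d and a disparity error δd produces a depth error of roughly Z²·δd/(f·B), so the relative error grows linearly with range. The focal length (1200 px), baseline (0.5 m) and disparity error (0.25 px) below are hypothetical values chosen for illustration, not the product's specifications.

```python
def depth_from_disparity(disparity_px, focal_px, baseline_m):
    """Rectified pinhole stereo: Z = f * B / d."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px

def depth_error(depth_m, focal_px, baseline_m, disparity_err_px):
    """First-order depth uncertainty: dZ = Z^2 * dd / (f * B)."""
    return depth_m ** 2 * disparity_err_px / (focal_px * baseline_m)

f_px, baseline = 1200.0, 0.5     # hypothetical focal length (pixels) and baseline (m)
z = 50.0
d = f_px * baseline / z          # expected disparity at 50 m
dz = depth_error(z, f_px, baseline, 0.25)
print(d, depth_from_disparity(d, f_px, baseline), round(dz, 3), f"{100 * dz / z:.2f}%")
# 12.0 50.0 1.042 2.08%
```

Doubling the range to 100 m doubles the relative error under the same assumptions, which is why a ranging figure is only meaningful together with the distance it was measured at.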

Remote warning, tracking and identification system

[Laser-vision fusion lidar optoelectronic system]

Function description

The laser-vision fusion lidar optoelectronic system is based on a high-performance lidar and a low-light-level vision system. The low-light-level vision system consists of a wide-angle low-light-level camera and a long-focal-length low-light-level camera. The system is suitable for fine 3D object recognition and high-precision pose estimation in large scenes. It is also cost-effective and has great application potential in machine vision, intelligent transportation, intelligent terminals and other fields.

Characteristic parameter

The special lidar is notable for its high resolution, which overcomes the difficulty that sparse point clouds previously had in identifying small, fine targets, making it suitable for fine-grained scene applications. The high-performance lidar offers a long operating distance (500 m), a wide 120° horizontal field of view, high spatial resolution (0.01° × 0.01°) and high ranging accuracy (±2 cm). The wide-angle low-light camera has a horizontal field of view of more than 100°, and the long-focal-length low-light camera zooms across a 2° to 50° field of view with high-resolution imaging.
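The benefit of 0.01° angular resolution for small targets can be estimated from the gap between adjacent returns, which is approximately R·θ at range R. The sketch below evaluates this at the quoted 500 m operating distance; the 0.5 m target width is a hypothetical example.

```python
import math

def point_spacing(range_m, angular_res_deg):
    """Approximate gap between adjacent lidar returns (arc length R * theta)."""
    return range_m * math.radians(angular_res_deg)

def samples_across(target_width_m, range_m, angular_res_deg):
    """Rough number of scan columns falling on a target of the given width."""
    return target_width_m / point_spacing(range_m, angular_res_deg)

print(f"{point_spacing(500, 0.01) * 100:.1f} cm")    # 8.7 cm
print(f"{samples_across(0.5, 500, 0.01):.1f}")       # 5.7
```

At 500 m, returns are spaced about 8.7 cm apart, so even a half-metre-wide object still receives several scan columns, whereas a scanner with 0.1° resolution would space them about 87 cm apart and could miss it entirely.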

[Rail transit traffic safety auxiliary warning system]

Function description

The auxiliary early warning system for locomotive running safety comprises long-range thermal infrared imaging, low-light imaging, short-range dual-light fusion imaging, laser ranging and GPS/BeiDou positioning. Through multi-sensor data fusion and real-time calculation and analysis of multi-dimensional information, it intelligently identifies intruders, livestock, vehicles and other hazards on the track ahead during train operation and issues early warnings. The auxiliary warning system for light rail and subway consists of binocular dual-light fusion cameras, which obtain not only the visible texture and temperature of a target but also its distance and three-dimensional morphology, so as to accurately identify and warn of foreign-object intrusions, such as people and falling rocks, ahead of the train.
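One way the laser-ranging and positioning outputs could feed a warning decision is to compare the obstacle range with the train's stopping distance (reaction distance plus braking distance v²/2a). The sketch below does this with hypothetical parameters: a 2 s reaction time, 1.0 m/s² deceleration and a 1.5× caution margin. The brochure does not describe its actual decision logic.

```python
def stopping_distance(speed_mps, reaction_s, decel_mps2):
    """Distance covered during the reaction time plus braking to a stop."""
    return speed_mps * reaction_s + speed_mps ** 2 / (2.0 * decel_mps2)

def warning_level(obstacle_range_m, speed_mps, reaction_s=2.0, decel_mps2=1.0, margin=1.5):
    """Classify an obstacle as alarm / caution / clear (hypothetical thresholds)."""
    d_stop = stopping_distance(speed_mps, reaction_s, decel_mps2)
    if obstacle_range_m <= d_stop:
        return "alarm"
    if obstacle_range_m <= margin * d_stop:
        return "caution"
    return "clear"

# 20 m/s (72 km/h): 40 m reaction + 200 m braking = 240 m under these assumptions
for r in (200, 300, 500):
    print(r, warning_level(r, 20.0))
# 200 alarm
# 300 caution
# 500 clear
```

Because braking distance grows with the square of speed, detection range is the limiting factor for locomotives, which is consistent with the emphasis on kilometer-level warning distance.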

Characteristic parameter

The locomotive auxiliary early warning system provides all-weather, kilometer-level, real-time early warning for locomotives, a first in China's rail transit field. The light rail and subway auxiliary warning system has a working distance of more than 100 m. Both offer all-weather operation, higher accuracy and reliability, practicality and cost-effectiveness.

[Pan-tilt multi-spectral remote observation and tracking system]

Function description

The multi-spectral intelligent lightweight pan-tilt head is a remote observation and tracking product that integrates infrared thermal imaging, high-definition visible-light imaging, laser fill-light imaging and a high-precision pan-tilt head. It supports intelligent functions such as optical fog penetration, optical image stabilization, fire-point detection and regional intrusion detection, which effectively improve target recognition accuracy at night and in bad weather. It is suitable for railway perimeter protection, waterway monitoring, remote sensing of port berthing and departure, forest and grassland smoke-and-fire monitoring, and other scenarios.

Characteristic parameter

The railway perimeter protection and early warning system covers a 3 km stretch along the railway with an early-warning accuracy above 95%, providing all-weather monitoring and early warning of personnel intrusion along the railway and its perimeter.

[Onboard electro-optical stabilized tracking dome camera]

Function description

Infrared and visible-light dual-spectrum fusion imaging, combined with a high-precision electro-optical stabilization platform, is applied to shipborne and vehicle-mounted mobile platforms. The stabilization platform counteracts platform motion to achieve continuous, stable tracking and observation of the target.

Characteristic parameter

The electro-optical stabilized tracking dome has a pointing accuracy of up to 0.01° and good dynamic tracking characteristics; in stabilized mode, its maximum pitch and horizontal slew rates exceed 200°/s. The identification range for typical tugboat targets on water is more than 3 km.

Wearable fusion vision system

[Wearable fusion sensor and smart helmet]

Function description

The device integrates thermal infrared, low-light visible, laser methane telemetry, environmental gas and other sensors for multi-dimensional perception, enabling intelligent meter reading at industrial sites, detection of abnormal equipment temperatures, telemetry of flammable and explosive gases, and detection of hydrogen sulfide, carbon monoxide, oxygen and other key hazardous gases. Integrated into a smart helmet with mixed-reality capability, it displays multi-dimensional sensor information on AR glasses, enhancing the perception and understanding of inspection personnel and creating a "super worker".

Characteristic parameter

The wearable multi-dimensional perception sensor is compact and low-power. Its integrated AI chip provides clearer visual enhancement and more intelligent target detection, and its small form factor allows it to be flexibly embedded in the smart helmet. The infrared/low-light dual-spectrum imager and the laser methane telemeter each have a working distance of more than 20 m.

[Wearable integrated sensing and autonomous positioning system]

Function description

The system integrates thermal infrared, low-light visible, IMU, environmental gas and other sensors for multi-dimensional perception. In harsh environments such as fires, it sees through smoke to detect fire points and personnel, perceive the temperature field, detect carbon monoxide, oxygen and other environmental gases, and sense human vital signs. Dual-spectral fusion vision combined with the IMU provides autonomous indoor navigation and positioning for personnel without base stations. Paired with a human-computer interaction device or a mixed-reality device, it helps firefighting and emergency personnel perceive the harsh scene and plan optimal routes.

Characteristic parameter

Compact, low-power and dual-spectral, the system extends visibility through smoke and fog in complex field environments. Infrared temperature measurement accuracy is ±2 ℃ at 5 m, the fusion field of view is 37° × 28°, and the output image resolution is 1280 × 960. Using fused vision and IMU without base stations, indoor autonomous navigation achieves a positioning accuracy better than 5‰ and a velocity measurement accuracy better than 0.3 m/s, meeting general requirements for medium-precision indoor positioning.
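A per-mille positioning figure for base-station-free navigation is usually read as error relative to distance travelled; that interpretation is an assumption here, since the text does not say. The sketch below integrates a simple 2-D dead-reckoning track from hypothetical (heading, speed, duration) steps and applies a 5‰ error budget to the distance covered. It is not the product's vision/IMU fusion, only an illustration of how the budget scales.

```python
import math

def dead_reckon(steps):
    """Integrate (heading_deg, speed_mps, dt_s) steps into a 2-D track.

    Heading 0° points along +x and 90° along +y (an assumed convention).
    Returns (x, y, distance_travelled).
    """
    x = y = dist = 0.0
    for heading_deg, speed, dt in steps:
        step = speed * dt
        x += step * math.cos(math.radians(heading_deg))
        y += step * math.sin(math.radians(heading_deg))
        dist += step
    return x, y, dist

def error_bound(distance_m, relative_error=0.005):
    """Position error budget if 5‰ is read as a fraction of distance travelled."""
    return distance_m * relative_error

x, y, d = dead_reckon([(0, 1.0, 10.0), (90, 1.0, 10.0)])  # walk 10 m east, then 10 m north
print(round(x, 6), round(y, 6), d, error_bound(d))  # 10.0 10.0 20.0 0.1
```

Under this reading, a firefighter who walks 200 m inside a building would stay within about 1 m of the estimated position.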

Smoke, fire, temperature and gas integrated intelligent visual system

[Image type ]

Function description

The image-type "smoke, fire, temperature and gas" composite detection system integrates an infrared thermal imager, a low-light-level camera, infrared fill light, an edge computing unit and other components. It is an intelligent safety monitoring system that combines abnormal temperature monitoring, smoke/fire detection and gas leak monitoring (based on the temperature difference between the gas and the background). It integrates intelligent algorithms for flame recognition, smoke recognition, abnormal temperature identification and marking, gas cloud leak detection and more, and is widely used in high-risk sectors such as oil, natural gas, hazardous chemicals, coal mining and electric power.
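Both abnormal-temperature monitoring and the background-contrast approach to gas leak monitoring come down to finding pixels whose temperature departs from a background model. The sketch below flags pixels whose difference from a background frame exceeds a threshold in either direction. The function name, the 2 ℃ threshold and the toy frames are hypothetical, and the product's actual algorithms are not described in the source.

```python
def thermal_anomalies(frame, background, threshold_c=2.0):
    """Return (row, col) of pixels whose temperature differs from the
    background model by more than threshold_c degrees Celsius (either sign)."""
    hits = []
    for r, (row, bg_row) in enumerate(zip(frame, background)):
        for c, (t, t_bg) in enumerate(zip(row, bg_row)):
            if abs(t - t_bg) > threshold_c:
                hits.append((r, c))
    return hits

background = [[20.0, 20.0], [20.0, 20.0]]
frame = [[20.5, 35.0],   # (0, 1): hot spot, e.g. overheating equipment
         [17.0, 20.2]]   # (1, 0): cooler than background, e.g. an absorbing gas cloud
print(thermal_anomalies(frame, background))  # [(0, 1), (1, 0)]
```

Using the absolute difference matters because a gas cloud can appear either warmer or cooler than its background depending on conditions.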

Characteristic parameter

Compared with traditional smoke-and-fire detectors and thermal imagers, the image-type "smoke, fire, temperature and gas" composite detection system integrates all monitoring factors in one highly integrated unit, addressing the long-standing challenges of early warning, timely response, multi-hazard compound detection and comprehensive coverage in safety monitoring of high-risk special sites. Depending on the application scenario, the product is available in conventional and explosion-proof versions. By detection distance, it is divided into a 50 m short-range image detector and a 100 m long-range image detector.

[VOCs infrared gas cloud intelligent imager]

Function description

Based on the "fingerprint" characteristics of gases in the infrared absorption spectrum, the infrared gas cloud imager uses infrared imaging to detect gas leaks in hazardous areas, and uses image enhancement and AI to identify leaks autonomously and issue early warnings. Fusion of cooled infrared thermal imaging with high-definition video quickly confirms VOC leaks and their diffusion trend and locates the leak source. Alongside gas leak monitoring, it also provides smoke-and-fire detection, industrial temperature measurement, conventional security and other functions.

Characteristic parameter

The infrared gas cloud imager uses long-life cooled infrared imaging technology rated for more than 20,000 hours. It detects trace VOC leaks, with a methane detection sensitivity of 100 ppm·m (ΔT = 4 ℃). It accurately traces the source and locates the leak point, shows the shape of the gas cloud and identifies the direction of the plume. Detection and analysis are real-time, within seconds; the observation range is wide, with an observation radius of hundreds of meters; and autonomous intelligence allows unattended operation.
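The unit ppm·m is a path-integrated concentration: the gas concentration multiplied by the thickness of gas the line of sight passes through. A 100 ppm·m sensitivity therefore corresponds equally to 100 ppm over 1 m or 10 ppm over 10 m. The sketch below sums concentration × length over segments of a line of sight and compares the result with that threshold. The plume values are hypothetical, and the sketch ignores the ΔT thermal-contrast condition under which the sensitivity is quoted.

```python
def path_integrated_concentration(segments):
    """Sum concentration x length (ppm·m) over (ppm, metres) segments along the line of sight."""
    return sum(ppm * length_m for ppm, length_m in segments)

def above_sensitivity(segments, sensitivity_ppm_m=100.0):
    """True if the line-of-sight column reaches the quoted 100 ppm·m sensitivity."""
    return path_integrated_concentration(segments) >= sensitivity_ppm_m

thin_plume = [(50.0, 1.0)]                 # 50 ppm over 1 m        -> 50 ppm·m
thick_plume = [(60.0, 2.0), (20.0, 1.0)]   # 120 + 20 ppm·m         -> 140 ppm·m
print(above_sensitivity(thin_plume), above_sensitivity(thick_plume))  # False True
```

In practice, detectability also depends on the temperature contrast between the gas and the background, which is why the sensitivity figure is stated together with ΔT.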

[Laser gas cloud imaging radar]

Function description

The laser gas cloud imaging radar builds on a laser methane telemeter and adds a high-speed lidar scanning mechanism to image leaking methane gas clouds. Compared with a conventional pan-tilt telemeter, it makes methane leaks visible and addresses leak localization, leak-scale estimation and situation assessment.

Characteristic parameter

Combined with an explosion-proof pan-tilt head, the laser gas cloud imaging radar can cruise over 360° horizontally and 90° in pitch. It can scan continuously or stop at preset points to stare at and image key locations. The detection distance is 100 m, the image frame rate is 0.25 Hz, and the field of view is about 10° × 10°.
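The frame rate and field of view together determine how long a full cruise takes. The sketch below counts the 10° × 10° tiles needed to cover 360° × 90° and multiplies by the 4 s frame period (1 / 0.25 Hz). It assumes no overlap between tiles and ignores slewing and settling time, so a real cruise would take longer.

```python
import math

def full_scan_time(h_range_deg, v_range_deg, fov_h_deg, fov_v_deg, frame_rate_hz):
    """Tiles needed to cover the scan range and total time at one frame per tile.

    Assumes non-overlapping tiles and zero slew/settle time.
    """
    tiles = math.ceil(h_range_deg / fov_h_deg) * math.ceil(v_range_deg / fov_v_deg)
    return tiles, tiles / frame_rate_hz

tiles, seconds = full_scan_time(360, 90, 10, 10, 0.25)
print(tiles, seconds, seconds / 60)  # 324 1296.0 21.6
```

A full sweep takes over 20 minutes under these assumptions, which shows why staring at preset key positions is useful for fast response.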

Integrated perception intelligent vision technology and products
